About

https://arxiv.org/pdf/1612.00593.pdf

As the price of LiDAR hardware declines, 3D point cloud processing is expected to become increasingly important. Unlike cameras, 3D sensors measure absolute distance directly, which makes them useful in their own right. They are also expected to play a major role in creating the datasets used for image recognition.

Model

These expectations have led to the development of deep learning models that process 3D point clouds. One model that stands out for both its design and its performance is PointNet, a deep learning model for the classification and segmentation of 3D point cloud data. It is interesting because its mechanism is unusual.

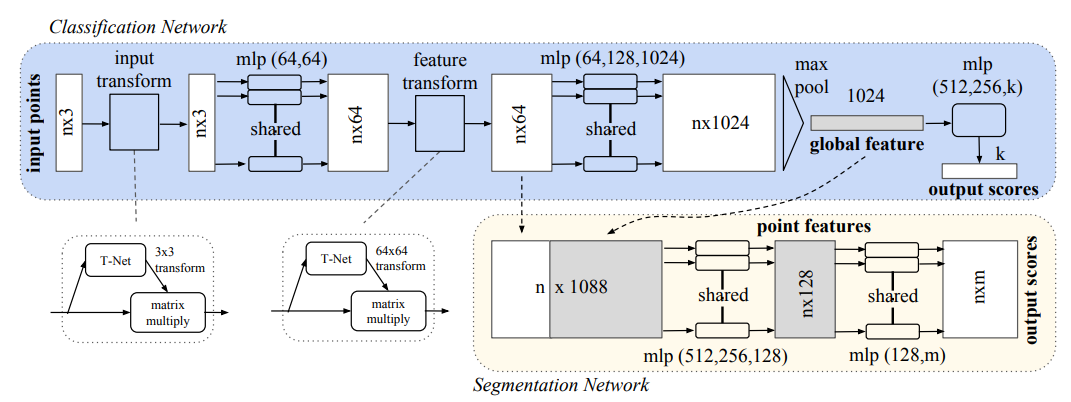

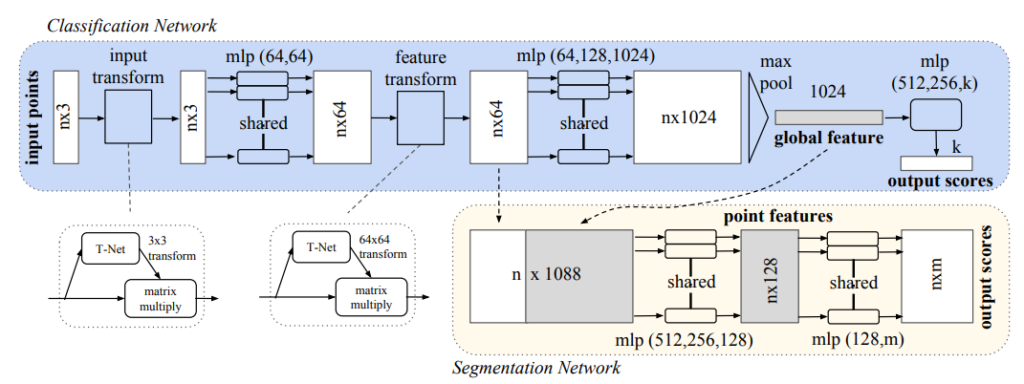

The figures above show the model architecture of PointNet.

Three things are interesting about this network:

- The number of 3D points is not fixed.

Since the number of 3D points is unknown until the scene has been measured, the network has to accept an input of any size.

- Transform architecture

A 3D object is easier to classify when it is viewed from a consistent perspective, so the model first transforms the 3D points with a 3 x 3 transformation matrix. The matrix is not fixed: it is predicted by a small sub-network (T-Net in the paper) whose weights are learned during training.

- Pooling a global feature from the per-point features

A global feature is extracted by max-pooling over the per-point features, which summarizes the whole point cloud. Even if the number of input 3D points is not fixed, the size of the extracted feature is fixed.
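The three points above can be sketched in a few lines of NumPy. This is only an illustration under assumptions, not the paper's implementation: the weights `W1` and `W2` are random stand-ins for a trained shared MLP, the identity matrix stands in for the learned 3 x 3 transform, and the function name `global_feature` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical shared weights: the same small MLP is applied to every point.
W1 = rng.standard_normal((3, 64))
W2 = rng.standard_normal((64, 1024))


def global_feature(points, transform=None):
    """Map an (N, 3) point cloud to a fixed-size (1024,) global feature."""
    if transform is None:
        transform = np.eye(3)  # stand-in for the learned 3 x 3 matrix
    aligned = points @ transform               # (N, 3)
    hidden = np.maximum(aligned @ W1, 0.0)     # (N, 64), ReLU
    per_point = np.maximum(hidden @ W2, 0.0)   # (N, 1024), ReLU
    return per_point.max(axis=0)               # max-pool over points -> (1024,)


small_cloud = rng.standard_normal((100, 3))
large_cloud = rng.standard_normal((2500, 3))

feat_small = global_feature(small_cloud)
feat_large = global_feature(large_cloud)
# Reordering the points should not change the pooled feature.
feat_shuffled = global_feature(rng.permutation(small_cloud))

print(feat_small.shape, feat_large.shape)
print(np.allclose(feat_small, feat_shuffled))
```

Because the MLP is applied to each point independently and the maximum is taken over the point axis, the output size depends only on the last layer width, and the order of the points does not matter.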

Assumed Disadvantages

- The global feature is concatenated to every per-point feature in the segmentation network.

Because the same single global feature is copied onto every per-point feature, this handling of information seems wasteful.

- Extracting a single global feature from all 3D points loses local features.

This does happen. PointNet++ was proposed to address this issue.
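To make the first disadvantage concrete, here is a minimal sketch of the concatenation step in the segmentation branch. The feature sizes (64 per point, 1024 global) follow the paper's architecture figure; the random values and variable names are placeholders for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

num_points = 5
point_features = rng.standard_normal((num_points, 64))  # one 64-dim feature per point
global_feature = rng.standard_normal(1024)              # one vector for the whole cloud

# Repeat the same global vector for every point, then concatenate.
tiled = np.broadcast_to(global_feature, (num_points, 1024))
seg_input = np.concatenate([point_features, tiled], axis=1)

print(seg_input.shape)
```

Every row of `seg_input` ends with the same 1024 values, so most of each per-point input is duplicated, and nothing in it describes the neighborhood around that particular point.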