Note

https://hirokatsukataoka16.github.io/Pretraining-without-Natural-Images/

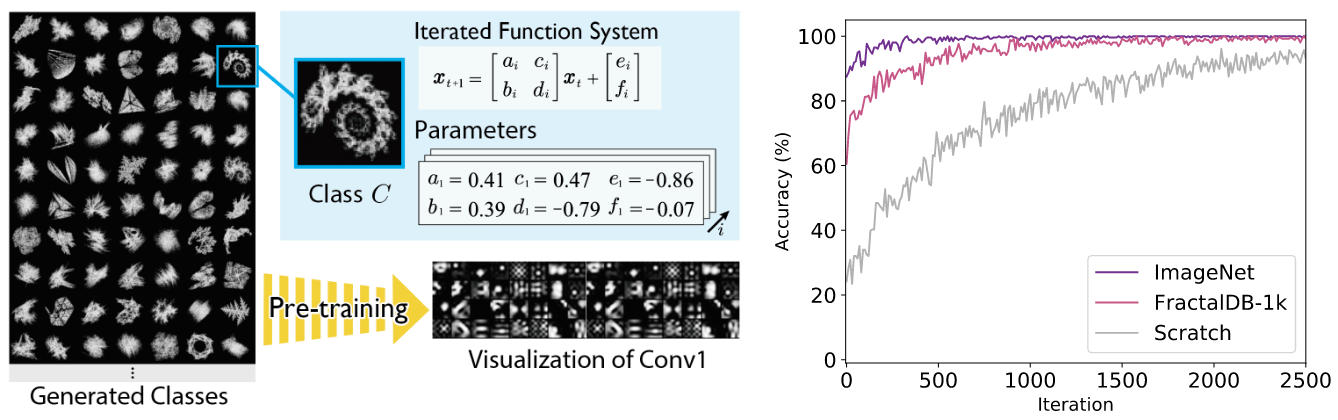

The paper introduces Formula-driven Supervised Learning (FDSL), in which convolutional neural networks (CNNs) are pre-trained on automatically generated image patterns instead of natural images. Both the patterns and their labels are generated from mathematical formulas, in particular fractals, which follow laws found throughout natural objects and scenes.
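FractalDB images are rendered from iterated function systems (IFS): a small set of 2-D affine maps whose attractor is a fractal. The following is a minimal, stdlib-only sketch of that idea, not the authors' code. The names `random_ifs`, `chaos_game`, and `render` are hypothetical, and the coefficient range, the contraction check, and the determinant-based map probabilities are assumptions chosen for illustration.

```python
import random

# One 2-D affine map: (x, y) -> (a*x + b*y + e, c*x + d*y + f), stored as (a, b, c, d, e, f).
Affine = tuple[float, float, float, float, float, float]


def random_ifs(n_maps: int, rng: random.Random) -> list[Affine]:
    """Sample an IFS of n_maps affine maps with coefficients uniform in [-1, 1].

    Maps are resampled until |a|+|b| < 1 and |c|+|d| < 1, a sufficient condition
    for each map to be a contraction, so the chaos game below stays bounded.
    """
    maps: list[Affine] = []
    while len(maps) < n_maps:
        a, b, c, d, e, f = (rng.uniform(-1.0, 1.0) for _ in range(6))
        if abs(a) + abs(b) < 1.0 and abs(c) + abs(d) < 1.0:
            maps.append((a, b, c, d, e, f))
    return maps


def chaos_game(ifs: list[Affine], n_points: int, rng: random.Random) -> list[tuple[float, float]]:
    """Repeatedly apply randomly chosen maps; the visited points trace the IFS attractor."""
    # Choose each map with probability proportional to |det|, a common heuristic.
    weights = [abs(a * d - b * c) for a, b, c, d, _, _ in ifs]
    if sum(weights) == 0.0:
        weights = [1.0] * len(ifs)
    x, y = 0.0, 0.0
    points = []
    for _ in range(n_points):
        a, b, c, d, e, f = rng.choices(ifs, weights=weights)[0]
        x, y = a * x + b * y + e, c * x + d * y + f
        points.append((x, y))
    return points


def render(points: list[tuple[float, float]], size: int) -> list[list[int]]:
    """Rasterize points into a size x size binary image (1 = visited pixel)."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_min, y_min = min(xs), min(ys)
    # Use one scale for both axes so the fractal keeps its aspect ratio.
    span = max(max(xs) - x_min, max(ys) - y_min) or 1.0
    image = [[0] * size for _ in range(size)]
    for x, y in points:
        col = int((x - x_min) / span * (size - 1))
        row = int((y - y_min) / span * (size - 1))
        image[row][col] = 1
    return image
```

For instance, the three maps of the Sierpinski triangle, each halving the plane and shifting by (0, 0), (0.5, 0), or (0.25, 0.5), can be passed to `chaos_game` and `render` directly, while `random_ifs` draws a new random fractal, roughly in the spirit of a FractalDB category.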

FDSL resembles Self-Supervised Learning in that labels are generated automatically, but it also generates the image patterns themselves from various mathematical formulas. Unlike pre-training on synthetic image datasets, FDSL datasets do not require object categories, surface textures, lighting conditions, or camera viewpoints to be defined in advance. The experiments investigate how to compose the dataset, varying the number of categories and instances per category, patch-based rendering, image coloring, and the number of training epochs.
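The FDSL notion of self-generated labels can be sketched as follows: each category is one randomly sampled IFS, its label is simply the category index, and instances are small variations of that IFS. This is an illustrative, stdlib-only sketch, not the FractalDB generation code; `sample_ifs`, `render_ifs`, `filling_rate`, `build_dataset`, the `min_fill` threshold, the 0.8 contraction bound, and the 0.8 to 1.2 instance weights are all hypothetical choices.

```python
import random

Affine = tuple[float, float, float, float, float, float]


def sample_ifs(n_maps: int, rng: random.Random) -> list[Affine]:
    """Hypothetical sampler: keep maps with |a|+|b| < 0.8 and |c|+|d| < 0.8.

    The 0.8 bound leaves headroom so that scaling by up to 1.2 (see below)
    still yields contractions (0.8 * 1.2 = 0.96 < 1).
    """
    maps: list[Affine] = []
    while len(maps) < n_maps:
        a, b, c, d, e, f = (rng.uniform(-1.0, 1.0) for _ in range(6))
        if abs(a) + abs(b) < 0.8 and abs(c) + abs(d) < 0.8:
            maps.append((a, b, c, d, e, f))
    return maps


def render_ifs(ifs: list[Affine], size: int, n_points: int, rng: random.Random) -> list[list[int]]:
    """Chaos game followed by rasterization into a size x size binary image."""
    weights = [abs(a * d - b * c) + 1e-12 for a, b, c, d, _, _ in ifs]
    x = y = 0.0
    pts = []
    for _ in range(n_points):
        a, b, c, d, e, f = rng.choices(ifs, weights=weights)[0]
        x, y = a * x + b * y + e, c * x + d * y + f
        pts.append((x, y))
    x0 = min(p[0] for p in pts)
    y0 = min(p[1] for p in pts)
    span = max(max(p[0] for p in pts) - x0, max(p[1] for p in pts) - y0) or 1.0
    img = [[0] * size for _ in range(size)]
    for x, y in pts:
        img[int((y - y0) / span * (size - 1))][int((x - x0) / span * (size - 1))] = 1
    return img


def filling_rate(img: list[list[int]]) -> float:
    """Fraction of pixels covered by the fractal."""
    return sum(map(sum, img)) / (len(img) * len(img[0]))


def build_dataset(n_categories: int, n_instances: int, size: int = 32,
                  n_points: int = 2000, min_fill: float = 0.05, seed: int = 0):
    """Return (image, label) pairs; the label is the index of the IFS that drew the image."""
    rng = random.Random(seed)
    categories: list[list[Affine]] = []
    while len(categories) < n_categories:
        ifs = sample_ifs(rng.randint(2, 4), rng)
        # Reject IFS whose attractor is too sparse (e.g. collapses to a few points).
        if filling_rate(render_ifs(ifs, size, n_points, rng)) >= min_fill:
            categories.append(ifs)
    dataset = []
    for label, ifs in enumerate(categories):
        for i in range(n_instances):
            # Assumed instance variation: scale the first map's linear part by w in [0.8, 1.2].
            w = 0.8 + 0.4 * i / max(n_instances - 1, 1)
            a, b, c, d, e, f = ifs[0]
            variant = [(a * w, b * w, c * w, d * w, e, f)] + ifs[1:]
            dataset.append((render_ifs(variant, size, n_points, rng), label))
    return dataset
```

Because labels come from the generator itself, raising `n_categories` or `n_instances` costs no annotation effort, which is what makes the category/instance scaling experiments cheap to run.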

CNNs pre-trained on FractalDB, a database containing no natural images, do not consistently outperform models pre-trained on human-annotated datasets, but in some settings they surpass the accuracy of ImageNet/Places pre-trained models. FractalDB pre-training also outperforms pre-training on other FDSL-generated datasets such as Bezier curves and Perlin noise; the authors attribute this to natural objects and scenes being structured according to fractal geometry. Visualizations of the convolutional layers and attention maps show that FractalDB pre-training yields distinctive learned features.