About

I found a beautiful formulation that introduces inducing points and variational methods for Gaussian processes in this paper:

Felix Leibfried, Vincent Dutordoir, ST John, and Nicolas Durrande “A Tutorial on Sparse Gaussian Processes and Variational Inference” https://arxiv.org/abs/2012.13962

Some papers explain inducing points in terms of matrix decomposition, but the explanation in this tutorial is a bit different, and I found it thought-provoking.

By the way, the idea of the method can be seen briefly in what follows.

Assumption and Purpose

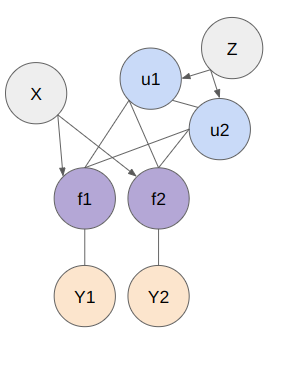

The true prior distribution p(u) is a Gaussian distribution with mean \mu_u and covariance matrix \Sigma_{uu}:

p(u) = \mathcal{N}(u \mid \mu_u, \Sigma_{uu})

Here, the variational distribution q(u) is introduced, which is another Gaussian distribution with mean m_u and covariance S_{uu}:

\begin{align}
q(u) = \mathcal{N}(u \mid m_u, S_{uu})
\end{align}

The purpose is to obtain the marginal distribution q(f):

\begin{align}
q(f) = \int p(f|u) q(u) \, du
\end{align}

This marginalization makes for a rather elegant explanation.

Conditional Probability

The conditional distribution of f given u follows from the standard conditioning formula for a multivariate Gaussian, where \Sigma_{fu} is the prior cross-covariance between f and u and \Sigma_{uf} is its transpose:

f | u \sim \mathcal{N}(\mu_f + \Sigma_{fu} \Sigma_{uu}^{-1}(u - \mu_u), \Sigma_{ff} - \Sigma_{fu} \Sigma_{uu}^{-1} \Sigma_{uf})

However, we obtain another form by using the approximate distribution q(u) in (1) and the marginal q(f) in (2). The integral in (2) can be solved analytically. The mean and covariance of (2) are

\mathbb{E}_q[f] = \mu_f + \Sigma_{fu} \Sigma_{uu}^{-1} \mathbb{E}_q[u - \mu_u]\\

= \mu_f + \Sigma_{fu} \Sigma_{uu}^{-1} (m_u - \mu_u)

\text{Cov}_q[f] = \Sigma_{ff} - \Sigma_{fu} \Sigma_{uu}^{-1} \Sigma_{uf} + \Sigma_{fu} \Sigma_{uu}^{-1} \mathbb{E}_q[(u - m_u)(u - m_u)^T] \Sigma_{uu}^{-1} \Sigma_{uf}\\

=\Sigma_{ff} - \Sigma_{fu} \Sigma_{uu}^{-1} (\Sigma_{uu} - S_{uu}) \Sigma_{uu}^{-1} \Sigma_{uf}

Here the covariance is centered at the variational mean m_u, so that \mathbb{E}_q[(u - m_u)(u - m_u)^T] = S_{uu}. We can summarize this as follows:

f \sim \mathcal{N} \left( \mu_f + \Sigma_{fu} \Sigma_{uu}^{-1}(m_u - \mu_u), \Sigma_{ff} - \Sigma_{fu} \Sigma_{uu}^{-1} (\Sigma_{uu} - S_{uu}) \Sigma_{uu}^{-1} \Sigma_{uf} \right)

This shows how q(u) corrects the distribution of f, and the formulation makes things clearer for me. Namely, the mean of the new distribution is the prior mean \mu_f of the original Gaussian process plus a correction term proportional to the difference between the variational mean m_u of the inducing points and their prior mean \mu_u. Likewise, the covariance is the prior covariance \Sigma_{ff} adjusted by a term that depends on \Sigma_{uu} - S_{uu}, so both corrections vanish when q(u) equals the prior p(u).
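As a quick numerical sanity check of the closed form for q(f), here is a minimal NumPy sketch. It is only an illustration under assumptions that are not in the tutorial: a zero prior mean, a squared-exponential kernel, and hypothetical names (`rbf`, `q_f_moments`, the input grid `x`, and the inducing inputs `z`). It checks that setting q(u) = p(u) gives back the prior moments, and then evaluates the moments for an arbitrary q(u).

```python
import numpy as np

def rbf(xa, xb, lengthscale=1.0, variance=1.0):
    """Squared-exponential kernel between two 1-D input arrays (assumed kernel)."""
    d = xa[:, None] - xb[None, :]
    return variance * np.exp(-0.5 * (d / lengthscale) ** 2)

def q_f_moments(mu_f, mu_u, Sigma_ff, Sigma_fu, Sigma_uu, m_u, S_uu):
    """Mean and covariance of q(f), the integral of p(f|u) q(u) over u."""
    # A = Sigma_fu Sigma_uu^{-1}; Sigma_uu is symmetric, so a solve avoids
    # forming the explicit inverse.
    A = np.linalg.solve(Sigma_uu, Sigma_fu.T).T
    mean = mu_f + A @ (m_u - mu_u)
    cov = Sigma_ff - A @ (Sigma_uu - S_uu) @ A.T
    return mean, cov

# Hypothetical setup: 20 evaluation inputs and 4 inducing inputs on [0, 5].
x = np.linspace(0.0, 5.0, 20)
z = np.linspace(0.0, 5.0, 4)
Sigma_ff = rbf(x, x)
Sigma_fu = rbf(x, z)
Sigma_uu = rbf(z, z) + 1e-8 * np.eye(len(z))  # small jitter for stability
mu_f = np.zeros(len(x))
mu_u = np.zeros(len(z))

# Case 1: q(u) equals the prior p(u), so both correction terms vanish.
mean_prior, cov_prior = q_f_moments(
    mu_f, mu_u, Sigma_ff, Sigma_fu, Sigma_uu, m_u=mu_u, S_uu=Sigma_uu
)

# Case 2: an arbitrary Gaussian q(u) with a random mean and a valid covariance.
rng = np.random.default_rng(0)
m_u = rng.normal(size=len(z))
L = 0.3 * rng.normal(size=(len(z), len(z)))
S_uu = L @ L.T + 1e-3 * np.eye(len(z))
mean_q, cov_q = q_f_moments(mu_f, mu_u, Sigma_ff, Sigma_fu, Sigma_uu, m_u, S_uu)

print(np.allclose(mean_prior, mu_f), np.allclose(cov_prior, Sigma_ff))
```

In the second case the mean moves away from \mu_f only through \Sigma_{fu} \Sigma_{uu}^{-1}(m_u - \mu_u), which matches the reading of the formula as a prior mean plus a correction term.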